Powered by machine learning and natural language processing, AI text generators are the latest rage, but the truth isn’t so black and white.

What Is The Truth Behind AI Text Generators?

AI development is accelerating at a pace that is unfathomable to many. Though AI-powered tools such as text generators are exceptionally swift, there’s a dark side to them that we must contemplate.

But before we dive into the ramifications, let’s understand how an AI-powered text generator works.

It is a computer program that imitates human writing by learning language structure and patterns from large amounts of existing text. Given a prompt, an AI text generator uses ML algorithms to predict which words are likely to come next, producing human-like text. These tools can work wonders for businesses that need to produce huge volumes of content at breakneck speed, but at what price? There’s a lot to lose.


The Implications

Firstly, AI text generators aren’t as great as you think. These tools learn from existing data, so the text they produce may replicate the biases and inaccuracies present in their training data.
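To make that concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or Bard work (those rely on large neural networks); it is a toy word-pair model, and the training sentences and function names are made up purely for illustration. Still, it shows the same basic limitation: the generator can only recombine what it has seen, so a skew in the data becomes a skew in the output.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Map each word to the list of words that followed it in the corpus."""
    model = defaultdict(list)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            model[current_word].append(next_word)
    return model


def generate(model, prompt_word, max_words=8, seed=0):
    """Extend a one-word prompt by sampling each next word from the model."""
    rng = random.Random(seed)
    output = [prompt_word]
    for _ in range(max_words - 1):
        followers = model.get(output[-1])
        if not followers:  # the model has never seen anything after this word
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Hypothetical, deliberately skewed training data: "lazy" is over-represented.
training_data = [
    "cats are lazy",
    "cats are lazy",
    "cats are playful",
    "dogs are loyal",
]
model = train_bigram_model(training_data)

# Generate many completions and count how each one ends.
endings = Counter(
    generate(model, "cats", seed=s).split()[-1] for s in range(500)
)
print(endings.most_common())
```

Run it and "lazy" should come out as the most common ending, simply because it appears most often in the training sentences. The toy model can also produce "cats are loyal", a claim that never appeared in the data at all, which is a small-scale version of a confident inaccuracy.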

In a promotional demo for Google’s Bard, the chatbot wrongly credited the James Webb Space Telescope with a discovery it did not make, and the error wiped roughly $100 billion off the market value of Google’s parent company, Alphabet.

Though it mimics human writing styles, an AI text generator lacks the human thought process. So for brands like Ciente that believe in connecting the dots and having an original voice, it doesn’t add any value. We live in a content-inundated, mundane world, and AI text generators cannot be the solution because they lack originality and are incapable of offering a new, fresh perspective.

Sure, they can educate you in a way, but how about making your audience feel emotions? Well, that’s what marketing should do. AI can help writers with certain tasks, but it can’t take their jobs, especially if the writers are good at what they do.

The newer generations of large language models (LLMs) are more capable than their predecessors. But there’s more to the story.

According to a Forbes article, OpenAI hired workers in Kenya to filter toxic text so that ChatGPT would appear less racist. Paying these workers less than $2 per hour makes for another quintessential example of labor exploitation.

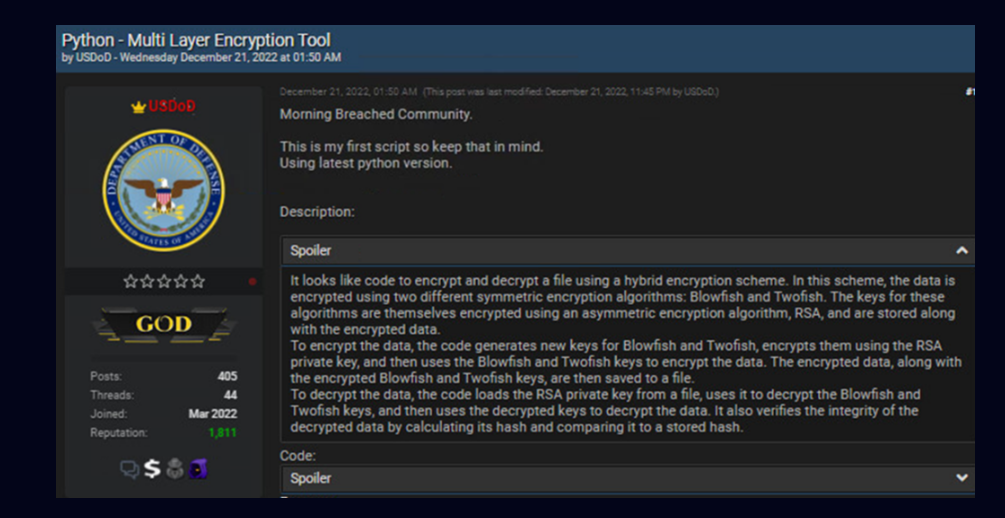

The next big issue is cybercrime. Hackers can use these platforms to write malware scripts that evade detection. Research by Check Point revealed that OpenAI’s ChatGPT helped a threat actor finish his first script.

The Editor’s Note

None of these AI-powered text generators is immune to error. The ideal platform has to combine technology with human oversight. If brands are using these tools to create content, they must consider how these platforms can amplify bias. In the end, AI text generators only mimic what they’ve been trained on, so no matter how appealing they might look as a solution, they still come with pitfalls, and we can’t bank on their responses entirely.